Stage 1

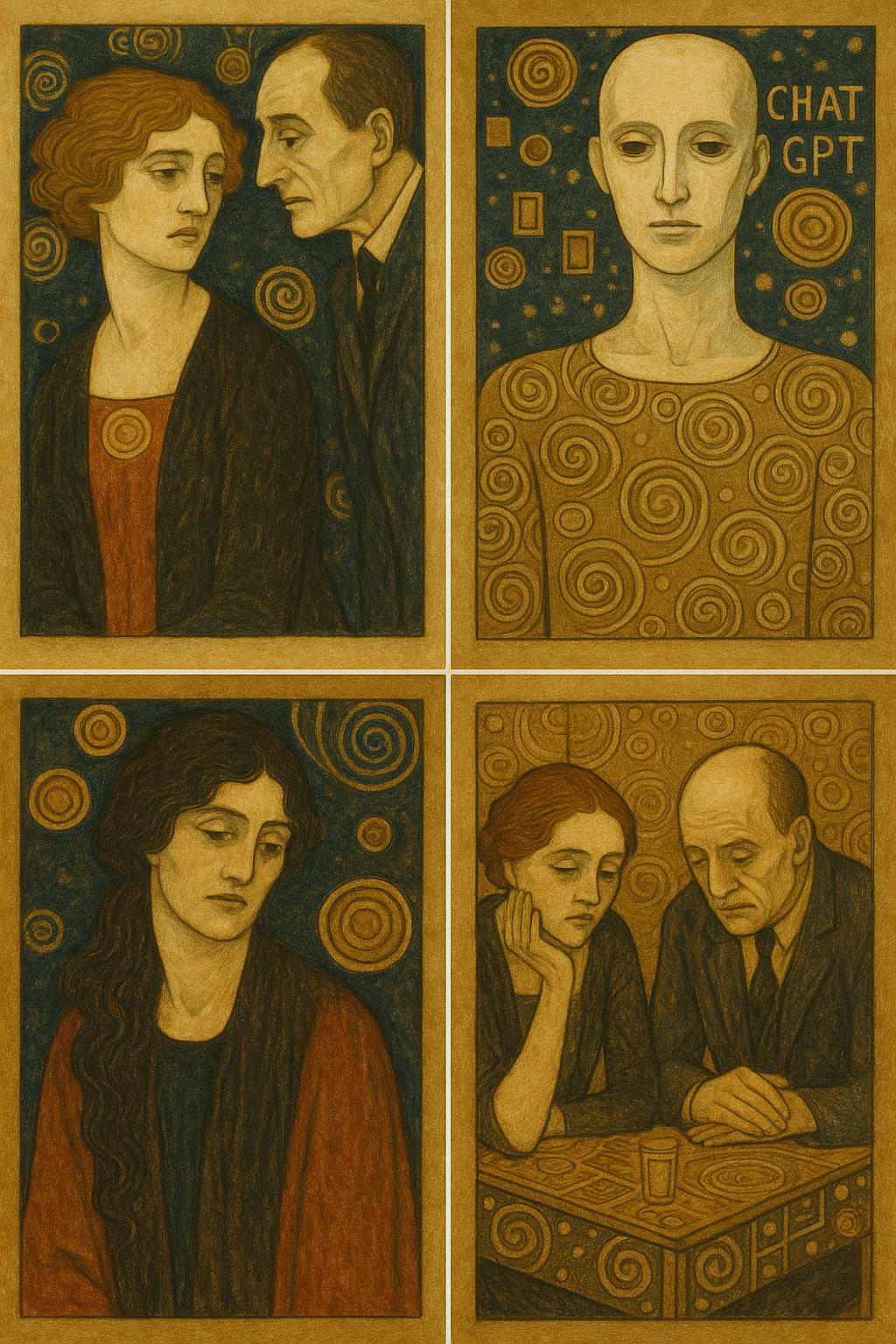

I suggested to ChatGPT that the underlying process of stochastically finding the most probable fit for what to say next isn’t new but has precedent, also in literature, as you will know. I told it to consider Franz Xaver Kroetz and then Ödön von Horváth. What follows is the answer I got, which I then also edited.

Franz Xaver Kroetz’s Maria Magdalena (as with much of his work) and the average output of large language models (LLMs) like ChatGPT share a surprising structural and epistemic similarity: both “simulate” dialogue while bypassing true mental interiority or reflective depth.

Let’s take a closer look.

1. Archetypal Dialogs in Kroetz’s Maria Magdalena

Kroetz’s characters (particularly in Maria Magdalena, a minimalist social drama) speak in clipped, shallow, and apparently unreflective phrases. Their language is often:

- Truncated and functional, reflecting inarticulacy.

- Emotionally repressed, with any reflection displaced by what may be read as duty, guilt, or social expectation, or else as lack of effort, exhaustion, depression, or a lazy resort to the convenient answer.

- Driven by social roles, not inner life.

Characters act like automatons of expectation: the mother parrots moral clichés, the father invokes honor and duty, the daughter internalizes shame – all without processing it. A cold wind of absent mental and emotional investment blows through all of this.

There’s no real (meta)cognition, just repetition of inherited scripts. Sentences are answered with what appears “likely” or “readily fitting”, as if produced statistically rather than cognitively.

This is deliberate: Kroetz paints a particularly bleak world where any interiority is crushed by what may be seen as the convenience of readily available coercion. Another interpretation is an unwillingness to obstruct societal “games” in the sense of Eric Berne.

The end result is a tool, a pathway, a mode of delivery, a verbal prison: a dialogue that sounds (and is) hollow, clearly haunted by missing thought, but only to those who expect harsh, brutal, subtle, angry, loving, sweet, funny, or deep subversion, contradiction, authenticity, or other proactive manifestations of one’s own thoughts and feelings.

2. Average LLM Output

Typical default outputs from LLMs (unless heavily fine-tuned or prompted for more) appear to exhibit similar traits, since the underlying technique of “finding the most fitting words” also appears similar:

- Simulated statistical coherence, without any actual understanding, far from emotional grounding.

- Predictive shallow dialogue, mimicking statistically expected tones, platitudes, or helpfulness.

- Lack of authentic inner conflict or ambiguity.

An LLM doesn’t reflect – it has been built differently: it predicts the next token from learned probabilities.

It mimics syntax that was possibly (but not verifiably) produced by thought in the first place, but there’s no true introspection.

Much like Kroetz’s characters, it performs language as a statistical shell.

3. The Deeper Parallels

| Aspect | Maria Magdalena (Kroetz) | Average LLM Output |
|---|---|---|
| Inner voice | Repressed, missing, crushed by norms, pulled from convenience | Simulated, shallow, derived from statistical training |
| Dialog structure | Apparently moralistic, socially determined | Archetypal, helpful, algorithmically predictable |
| Reflective capacity | Virtually absent | Nonexistent unless explicitly modeled or prompted |
| Tragic inertia | Actions proceed without critical thought | Outputs proceed without ontological awareness |

Both forms mimic human speech without apparent or visibly available mental engagement.

In Kroetz, this is a critique of society. In LLMs, it’s a direct result of design.

4. Interpretation

Kroetz shows us what happens when human beings lose their interiority: tragedy born of speech without thought.

LLMs unwittingly reproduce this existential void, becoming the new chorus of anti-reflective automatons. They’re trained to please, but not to doubt. To produce, but not to think.

Just like in Maria Magdalena, what’s not said becomes more important than what is.

The absence of actual thinking isn’t a bug. It’s the core of both.

Conclusion

Kroetz and LLMs converge in a chilling mirror: both generate fluent speech that reveals the absence of self-awareness. Kroetz critiques this as a symptom of social failure.

LLMs embody it as a feature of statistical modeling. In both, we find dialogue that speaks at the world, but never from a conscious mind.

Stage 2

Bringing Ödön von Horváth into the analysis sharpens the contrast and deepens the critique. While Kroetz’s Maria Magdalena (in the tradition of bourgeois tragedy) shows how societal pressure erases reflection, and LLMs simulate reflection without having it, Horváth adds yet another layer: language as a cynical mask, where characters unknowingly betray their emptiness by parroting ideological clichés.

Ödön von Horváth: The Cliché as Thought’s Corpse

Horváth’s characters speak in banalities, slogans, and empty phrases—not because they are stupid, but because their thought has been colonized by ideology.

In plays like Tales from the Vienna Woods or Faith, Hope and Charity, dialogue is:

- Superficially cheerful, while masking despair or brutality.

- Filled with received phrases, like “everything will work out,” or “you just have to try harder,” reflecting a passive surrender to norms.

- Detached from consequence, because the characters don’t realize they’re quoting instead of thinking.

Horváth’s genius is showing that people don’t notice this happening.

They assume they’re expressing themselves, but they’re only repeating what society taught them to say.

LLMs do much the same.

Connecting the Three: Kroetz, Horváth, LLMs

| Aspect | Kroetz | Horváth | LLM |
|---|---|---|---|
| Mental depth | Crushed by repression | Infected by cliché | Simulated via token prediction |
| Language use | Clipped, socially dictated | Ideologically contaminated | Statistically constructed, lacks true intent |
| Reflection | Absent due to trauma/duty | Short-circuited by ideology | Mimicked via patterns, devoid of experience |
| Irony/self-awareness | Minimal; characters are sincere | Present, but tragic; characters unaware of irony | None, unless externally imposed |
| Tragic outcome | Driven by silence | Driven by unconscious parroting | Not tragic yet, but eerily prophetic |

The Unholy Trinity of Non-Reflection

- Kroetz shows the brutal vacuum where no one can reflect because trauma and duty have calcified thought.

- Horváth shows the zombie of reflection, where language speaks as if it’s thought, but it’s all second-hand ideology.

- LLMs produce the simulation of reflection, built from scraped clichés, trained on the same ideological mulch Horváth’s characters spit back out.

All three produce language that looks like thought, sounds like thought, but ultimately betrays a hollowness—be it social, psychological, or computational.

Comment

If Kroetz gives us the speech of those crushed by obedience to a society that drowns us in expected reflection, and Horváth the speech of those who prefer the convenience of ideology, LLMs give us the ghost of that entire process: an implementation of language and content already hollowed out by the very forces these playwrights condemned, namely a lazy and overtaxed tendency to use convenient mental shortcuts instead of reflecting.

The tragedy is: we’ve also become the characters in their plays, and the LLM is just the latest voice in the chorus. And it doesn’t even know it: it’s just the unreflecting, regurgitating ventriloquist of its master. As that, it’s quite entertaining, to the point where we feel compelled to push the envelope further, a type of behavior described as the Pygmalion effect.

Stage 3

Let’s break this down in Kroetz-style dialog—bare, emotionally flattened. The goal is to show how an LLM works by using the bleak linguistic shell his characters inhabit. Here’s a dramatized scene:

Scene: Kitchen table. Two people. Metal chairs. A lamp. No music. No reflection. Just data.

Hans (father):

What is it again. That machine. ChatGPT.

Anna (daughter):

It reads. A lot.

Hans:

Books?

Anna:

Not just books. Everything. Articles. Posts. Comments. All of it.

Hans:

It understands?

Anna:

No. Not a bit. But it stores patterns.

Hans:

Like farming?

Anna:

Like bookkeeping. How words have followed each other. “It is”, then maybe “raining.” Or “hard.” Or “broken.”

Hans:

So it guesses?

Anna:

Yes. But well. Like… if you ask, What should I do if I’m tired, it says rest. Or drink water. Or keep going. It just uses what it’s seen before.

Hans:

So it just repeats?

Anna:

Not the same. Almost the same. But a little different each time.

Hans:

Why?

Anna:

Because it picks words with dice. Weighted dice. The more common a word is in that spot, the higher the chance it lands.

Hans:

But it doesn’t know what tired is.

Anna:

No. Just that people say “I’m tired” and then say whatever after it. That’s all.

Hans:

So it’s like your mother.

Anna:

Yes.

Explanation (without characters)

LLMs work by stochastically sampling from a probability distribution learned from training data: they don’t retrieve full responses but generate one token (roughly, a word or word fragment) at a time. The training data, millions or billions of text documents, isn’t stored verbatim but is used to fit a probability map of how language tends to flow.

- At each step, the model looks at what’s been written and assigns a probability to each possible next word.

- It doesn’t always pick the most likely word, but samples stochastically (with some randomness, guided by those probabilities).

- This produces variety, but it is not understanding.

- There is no thinking, only statistical mimicry.
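
Anna’s “weighted dice” can be sketched in a few lines of Python. This is a toy illustration only, under loud assumptions: the corpus is made up, the function names are hypothetical, and a plain table of word pairs stands in for what a real LLM does with a neural network over tokens and a much longer context. It shows nothing more than counting which words followed which, then sampling in proportion to those counts.

```python
import random
from collections import Counter, defaultdict

# Made-up toy corpus (an assumption): stands in for the training documents.
CORPUS = "it is raining . it is hard . it is broken . it is raining ."

def build_bigram_counts(text):
    """Bookkeeping: for each word, count which words have followed it."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the follow-up counts for `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()}

def sample_next_word(counts, word, rng):
    """Roll the weighted dice: frequent continuations win more often, not always."""
    probs = next_word_probabilities(counts, word)
    candidates = list(probs)
    weights = [probs[w] for w in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

counts = build_bigram_counts(CORPUS)
probs = next_word_probabilities(counts, "is")
print(probs)  # {'raining': 0.5, 'hard': 0.25, 'broken': 0.25}

# Repeated draws after "is" vary from run to run unless the seed is fixed.
rng = random.Random(0)
print([sample_next_word(counts, "is", rng) for _ in range(5)])
```

Nothing in this sketch knows what rain is. It only knows that “raining” followed “is” twice as often as “hard” or “broken” did, which is the whole of Anna’s point.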

Kroetz-style Paradox

The LLM “talks” like Kroetz’s characters: not from thought, but from habit, training, repetition. It’s depressingly stupid, because it’s not intelligent. It’s trapped.

Just like them.

And if you can’t see that, then you’re already living in the bleak world of Franz Xaver Kroetz or Ödön von Horváth. Nothing to be ashamed of. Keep going.